News

Looking Back in Time

Published August 16, 2007

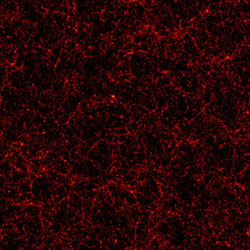

To help understand these observations, UC San Diego cosmologist Michael Norman and collaborators are using the ENZO cosmology code to simulate the universe from first principles, starting near the Big Bang. In work submitted to the Astrophysical Journal, the researchers have conducted the most detailed simulations ever of a region of the universe 500 megaparsecs across (more than 1.5 billion light years).
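The size quoted for the simulated region can be checked with a standard unit conversion. The sketch below assumes the usual factor of about 3.26156 light years per parsec. On that basis, 500 megaparsecs comes to roughly 1.63 billion light years, which agrees with "more than 1.5 billion." The function name is illustrative only.

```python
# Convert the simulated region's size from megaparsecs to light years.
LIGHT_YEARS_PER_PARSEC = 3.26156  # standard astronomical conversion factor


def megaparsecs_to_light_years(mpc: float) -> float:
    """1 megaparsec = 1,000,000 parsecs."""
    return mpc * 1e6 * LIGHT_YEARS_PER_PARSEC


size_ly = megaparsecs_to_light_years(500)
print(f"{size_ly / 1e9:.2f} billion light years")  # prints "1.63 billion light years"
```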

The size and detail of the results will be useful to other researchers involved in spatial mapping and simulated sky surveys, shedding light on the underlying physical processes at work. But to keep the model faithful to reality, the researchers need to capture the extreme variations in the density of matter as it coalesces under gravity, growing many orders of magnitude denser in some regions.

"We need to zoom in on these dense regions to capture the key physical processes -- including gravitation, flows of normal and 'dark' matter, and shock heating and radiative cooling of the gas," said Norman. "This requires ENZO's 'adaptive mesh refinement' capability."

Adaptive mesh refinement (AMR) codes begin with a coarse grid and then spawn finer, more computationally demanding subgrids wherever they are needed to track key processes in higher-density regions.
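To make the AMR idea more concrete, here is a minimal sketch in Python. It rests on several assumptions. The domain is a one-dimensional toy, and the density field `clump` is hypothetical. The refinement rule is a simple mass-based one: a cell is refined when its density times its width exceeds a threshold. Each refinement makes cells twice as fine. The names `Grid`, `flagged_runs`, `refine`, `mass_threshold`, and `max_level` are illustrative only. This is not ENZO's code, which works in three dimensions and uses its own refinement criteria.

```python
from dataclasses import dataclass, field
from math import exp
from typing import Callable, List, Optional, Tuple

# Hypothetical 1D toy model of adaptive mesh refinement (AMR).
# Not ENZO's implementation: ENZO is 3D and uses its own refinement criteria.


@dataclass
class Grid:
    level: int   # 0 = coarse root grid
    x0: float    # left edge of the grid
    x1: float    # right edge of the grid
    ncells: int  # number of equal cells spanning [x0, x1]
    children: List["Grid"] = field(default_factory=list)

    @property
    def dx(self) -> float:
        return (self.x1 - self.x0) / self.ncells


def flagged_runs(grid: Grid, density: Callable[[float], float],
                 mass_threshold: float) -> List[Tuple[int, int]]:
    """Return [start, end) index ranges of contiguous cells whose approximate
    mass (density at the cell center times cell width) exceeds the threshold."""
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i in range(grid.ncells):
        center = grid.x0 + (i + 0.5) * grid.dx
        if density(center) * grid.dx > mass_threshold:
            if start is None:
                start = i
        elif start is not None:
            runs.append((start, i))
            start = None
    if start is not None:  # a run that reaches the grid's last cell
        runs.append((start, grid.ncells))
    return runs


def refine(grid: Grid, density: Callable[[float], float],
           mass_threshold: float, max_level: int, factor: int = 2) -> None:
    """Recursively spawn finer subgrids over flagged regions, up to max_level."""
    if grid.level >= max_level:
        return
    for start, end in flagged_runs(grid, density, mass_threshold):
        child = Grid(level=grid.level + 1,
                     x0=grid.x0 + start * grid.dx,
                     x1=grid.x0 + end * grid.dx,
                     ncells=(end - start) * factor)  # cells `factor` times narrower
        grid.children.append(child)
        refine(child, density, mass_threshold, max_level, factor)


def count_subgrids(grid: Grid) -> int:
    return sum(1 + count_subgrids(c) for c in grid.children)


def max_depth(grid: Grid) -> int:
    return max([grid.level] + [max_depth(c) for c in grid.children])


def clump(x: float) -> float:
    """Hypothetical density field: uniform background plus one dense peak at x = 0.5."""
    return 1.0 + 1000.0 * exp(-((x - 0.5) / 0.05) ** 2)


if __name__ == "__main__":
    root = Grid(level=0, x0=0.0, x1=1.0, ncells=16)
    refine(root, clump, mass_threshold=0.5, max_level=7)
    print("subgrids:", count_subgrids(root), "deepest level:", max_depth(root))
```

The mass-based rule compares density times cell width against a fixed threshold. As a result, refinement concentrates around the dense peak and stops on its own once cells are small enough, and `max_level` caps the depth of the hierarchy outright. The low-density background is never refined, which is where AMR saves its computing effort.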

"We achieved unprecedented detail by reaching seven levels of subgrids throughout the survey volume -- something never done before -- producing more than 400,000 subgrids, which we could only do thanks to the two large-memory TeraGrid systems," said SDSC computational scientist Robert Harkness, who carried out the runs with astrophysicist Brian O'Shea of Los Alamos National Laboratory.

Running the code for a total of about 500,000 processor hours, the researchers used 2 TB of memory (around 2,000 times the memory of a typical laptop) on the IBM DataStar system at SDSC and 1.5 TB of shared memory on the SGI Altix Cobalt system at NCSA. To make these runs possible, Harkness and Norman's group worked together through an Advanced Support for TeraGrid Applications (ASTA) collaboration, making major improvements to the code's scaling and efficiency.

The simulations generated some 8 TB of data. The Hierarchical Data Format (HDF5) group at NCSA provided important support for handling the output, and SDSC's robust data storage environment allowed the researchers to store and manage the massive data set efficiently.

Reference

Hallman, E., O'Shea, B., Burns, J., Norman, M., Harkness, R., and Wagner, R., "The Santa Fe Light Cone Simulation Project: I. Confusion and the WHIM in Upcoming Sunyaev-Zel'dovich Effect Surveys," Astrophysical Journal, submitted.