News

StarGate Demo at SC09 Shows How to Keep Astrophysics Data Out of Archival "Black Holes"

Published November 19, 2009


As a leading expert in the field of astrophysics, Norman sees data as intellectual property that belongs to the researcher and his or her home institution - not the center where the data was computed. Some people, he says, claim that it's impossible to move those terabytes of data between computing centers and the institution where the researcher sits. But in a live demo in which data was streamed over a reserved 10-gigabit-per-second circuit provided by the Department of Energy's ESnet (Energy Sciences Network), Norman and his graduate assistant Rick Wagner showed it can be done.

While the scientific results of the project are important, the success in building reliable high-bandwidth connections linking key research facilities and institutions addresses a problem facing many science communities.

"A lot of researchers stand to benefit from this successful demonstration," said Eli Dart, an ESnet engineer who helped the team achieve the necessary network performance. "While the science itself is very important in its own right, the ability to link multiple institutions in this way really paves the way for other scientists to use these tools more easily in the future."

"This couldn't have been done without ESnet," Wagner said. Two aspects of the network came into play. First, ESnet operates the circuit-oriented Science Data Network, which provides dedicated bandwidth for moving large datasets. However, with numerous projects filling the network much of the time for other demos and competitions at SC09, Norman and Wagner took advantage of OSCARS, ESnet's On-Demand Secure Circuit and Advance Reservation System.

"We gave them the bandwidth they needed, when they needed it," said ESnet engineer Evangelos Chaniotakis. The San Diego team was given two two-hour bandwidth reservations on both Tuesday, Nov. 17, and Thursday, Nov. 19. Chaniotakis set up the reservations, then the network automatically reconfigured itself once the window closed.

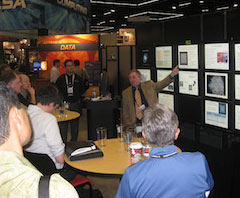

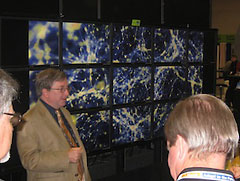

At the SDSC booth, the live streaming of the data drew a standing-room-only crowd as the data was first shown as a 4,096^3 cube containing 64 billion particles and cells. But Norman pointed out that the milky white cube was far too complex to absorb, then added that it was only one of numerous time-steps. In all, the data required for rendering came to about 150 terabytes.
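As a quick check on the grid size quoted above, the short Python sketch below counts the cells in a 4,096^3 cube. It suggests that "64 billion" is meant in the binary sense (64 x 2^30), which comes to roughly 68.7 billion in decimal terms. The variable names are illustrative only and do not come from the project.

```python
# Back-of-envelope check of the grid size quoted in the article.
GRID_SIDE = 4096  # cells along each side of the cube, as reported

cells = GRID_SIDE ** 3
binary_billions = cells / 2 ** 30   # counting a "billion" as 2^30
decimal_billions = cells / 1e9      # counting a "billion" as 10^9

print(f"total cells:       {cells:,}")
print(f"in units of 2^30:  {binary_billions:.0f}")
print(f"in units of 10^9:  {decimal_billions:.1f}")
```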



The project, Norman explained, is aimed at determining whether the signal of faint ripples in the universe known as baryon acoustic oscillations, or BAO, can actually be observed in the absorption of light by the intergalactic gas. It can, according to research led by Norman, who said his team was the first to determine this. Such a finding is critical to the success of a dark energy survey known as BOSS, the Baryon Oscillation Spectroscopic Survey. The results of his proof-of-concept project, Norman said, "ensure that BOSS is not a waste of time."

Creating a simulation of this size, even using the petaflops Cray XT5 Kraken system at the University of Tennessee, can take three months to complete because it is run in batches as time is allocated, Norman said. The data could then be moved in three nights to Argonne for rendering. The images were then streamed to the SDSC OptiPortal for display. Norman said the next step is to close the loop between the client side and the server side to allow interactive use. But the hard work - connecting the resources with adequate bandwidth - has been done, as evidenced by the demo, he noted.
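For a rough sense of scale, the sketch below estimates how long a dataset of about 150 terabytes would take to cross a 10-gigabit-per-second link. It assumes decimal terabytes, a fully sustained line rate, and no protocol overhead, none of which the article states, and it is not known whether the full 150 terabytes was what moved to Argonne. The function name `ideal_transfer_hours` is hypothetical.

```python
def ideal_transfer_hours(size_terabytes: float, rate_gbps: float) -> float:
    """Best-case transfer time in hours, ignoring overhead and contention."""
    size_bits = size_terabytes * 1e12 * 8   # decimal TB -> bits (assumption)
    rate_bits_per_s = rate_gbps * 1e9       # Gbps -> bits per second
    return size_bits / rate_bits_per_s / 3600


# Figures taken from the article: ~150 TB of data, 10 Gbps reserved circuit.
hours = ideal_transfer_hours(150, 10)
print(f"ideal transfer time: {hours:.1f} hours")
```

Under these idealized assumptions the transfer works out to a little over 33 hours, which is at least compatible with a move spread across several nights. Real-world throughput would likely be lower.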

But it wasn't just an issue of bandwidth, according to ESnet's Dart. "We did a lot of testing and tuning," said Dart. ESnet is managed by Lawrence Berkeley National Laboratory (LBNL).

Other contributors to the demo were Joe Insley of Argonne National Laboratory (ANL), who generated the images from the data, and Eric Olson, also of Argonne, who was responsible for the composition and imaging software. Network engineers Linda Winkler and Loren Wilson of ANL and Thomas Hutton of SDSC worked to set up and tune the network and servers before moving the demonstration to SC09. The project was a collaboration between ANL, CalIT2, ESnet/LBNL, the National Institute for Computational Science, Oak Ridge National Laboratory and SDSC.