News

SDSC Leads Supercomputing Efforts in Creating Largest-Ever Earthquake Simulation

Published August 18, 2010

Seismologists have long been asking not if, but when 'The Big One' will strike southern California. Just how big will it be, and how will the amount of shaking vary throughout the region? Now we may be much closer to answering at least the latter part of that question, and to helping the Golden State's emergency response teams better cope with such a potential disaster.


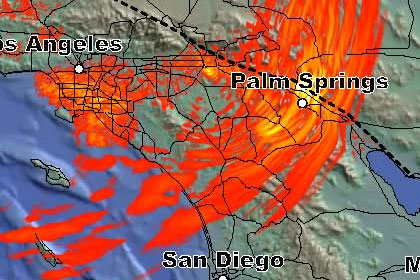

Snapshot of the ground motion 1 min 52 s after the Magnitude 8 simulation has initiated on the San Andreas fault near Parkfield (northern terminus of the dashed line). The waves are still shaking in the Ventura, Los Angeles, and San Bernardino areas (due to reverberations in their underlying soft sedimentary basins) while the rupture is still in progress, and the wave fronts are rapidly approaching San Diego. Image by Amit Chourasia, San Diego Supercomputer Center, UC San Diego.

Researchers at the San Diego Supercomputer Center (SDSC) at the University of California, San Diego and San Diego State University (SDSU) have created the largest-ever earthquake simulation, for a Magnitude 8.0 (M8) rupture of the entire southern San Andreas fault. About 25 million people reside in that area, which extends as far south as Yuma, Arizona, and Ensenada, Mexico, and runs up through southern California to as far north as Fresno.

SDSC provided the high-performance computing (HPC) and scientific visualization expertise for the simulation, while the Southern California Earthquake Center (SCEC) at the University of Southern California (USC) was the lead coordinator in the project. The scientific details of the earthquake source were handled by researchers at San Diego State University (SDSU), and the Ohio State University (OSU) was also part of the collaborative effort to improve the software efficiency.

The research was selected as a finalist for the Gordon Bell prize, awarded each year at the Supercomputing Conference for outstanding achievement in high-performance computing applications. This year's conference, called SC10 (Supercomputing 2010), will be held November 13-19 in New Orleans, Louisiana.

"This M8 simulation represents a milestone calculation, a breakthrough in seismology both in terms of computational size and scalability," said Yifeng Cui, a computational scientist at SDSC and lead author of Scalable Earthquake Simulation on Petascale Supercomputers. "It's also the largest and most detailed simulation of a major earthquake ever performed in terms of floating point operations, and opens up new territory for earthquake science and engineering with the goal of reducing the potential for loss of life and property."

The simulation, funded through a number of National Science Foundation (NSF) grants, represents the latest in seismic science on several levels, as well as for computations at the petascale level, which refers to supercomputers capable of more than one quadrillion floating point operations, or calculations, per second.

"The scientific results of this massive simulation are very interesting, and its level of detail has allowed us to observe things that we were not able to see in the past," said Kim Olsen, professor of geological sciences at SDSU, and lead seismologist of the study. "For example, the simulation has allowed us to gain more accurate insight into the nature of the shaking expected from a large earthquake on the San Andreas Fault."

Olsen, who cautioned that this massive simulation is just one of many possible scenarios that could actually occur, also noted that high-rise buildings are more susceptible to low-frequency, roller-coaster-like motion, while smaller structures usually suffer more damage from higher-frequency shaking, which feels more like a series of sudden jolts.

As a follow-on to the record-setting simulation, Olsen said the research team plans later this year to analyze potential damage to buildings, including Los Angeles high-rises, due to the simulated ground motions.

Record-setting on several fronts

"We have come a long way in just six years, doubling the maximum seismic frequencies for our simulations every two to three years, from 0.5 Hertz (or cycles per second) in the TeraShake simulations, to 1.0 Hertz in the ShakeOut simulations, and now to 2.0 Hertz in this latest project," said Phil Maechling, SCEC's associate director for information technology.

Specifically, the latest simulation is the largest in terms of the duration of the temblor (six minutes) and the geographical area covered: a rectangular volume approximately 500 miles (810 km) long by 250 miles (405 km) wide by 50 miles (85 km) deep. The team's latest research also set a new record in the number of computer processor cores used, with more than 223,000 cores running within a single 24-hour period on the Jaguar Cray XT5 supercomputer at the Oak Ridge National Laboratory (ORNL) in Tennessee. By comparison, a previous TeraShake simulation in 2004 used only 240 cores over a four-day period. Additionally, the new simulation used a record 436 billion mesh, or grid, points to calculate the potential effect of such an earthquake, versus only 1.8 billion mesh points used in the TeraShake simulations done in 2004.
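To put those figures in perspective, the short Python sketch below works through the arithmetic using only the numbers quoted above. It assumes, purely for illustration, that the 436 billion grid points fill the rectangular volume as a uniform cubic mesh; the release does not describe the mesh layout, so the resulting spacing is an implied estimate rather than a reported value. The function and variable names are hypothetical.

```python
# Figures quoted in the release (metric dimensions of the simulated volume).
LENGTH_KM, WIDTH_KM, DEPTH_KM = 810.0, 405.0, 85.0
M8_GRID_POINTS = 436e9          # "436 billion mesh or grid points"
TERASHAKE_GRID_POINTS = 1.8e9   # TeraShake, 2004
M8_CORES = 223_000              # "more than 223,000 cores", so a lower bound
TERASHAKE_CORES = 240           # TeraShake, 2004


def implied_uniform_spacing_m(length_km, width_km, depth_km, n_points):
    """Grid spacing in meters, ASSUMING a uniform cubic mesh fills the box."""
    volume_m3 = (length_km * 1e3) * (width_km * 1e3) * (depth_km * 1e3)
    # Each grid point then owns volume / n_points cubic meters;
    # the spacing is the edge length of that cube.
    return (volume_m3 / n_points) ** (1.0 / 3.0)


spacing_m = implied_uniform_spacing_m(LENGTH_KM, WIDTH_KM, DEPTH_KM, M8_GRID_POINTS)
grid_ratio = M8_GRID_POINTS / TERASHAKE_GRID_POINTS
core_ratio = M8_CORES / TERASHAKE_CORES

print(f"Implied grid spacing: about {spacing_m:.0f} m")
print(f"Grid points vs. TeraShake: about {grid_ratio:.0f}x")
print(f"Cores vs. TeraShake: at least {core_ratio:.0f}x")
```

Under that assumption the spacing works out to roughly 40 meters, with well over 200 times as many grid points and more than 900 times as many cores as the 2004 TeraShake run.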

Large-scale earthquake simulations such as this one can be used to evaluate earthquake early warning planning systems, and help engineers, emergency response teams, and geophysicists better understand seismic hazards not just in California but around the world.

"Petascale simulations such as this one are needed to understand the rupture and wave dynamics of the largest earthquakes at shaking frequencies required to engineer safe structures," said Thomas Jordan, director of SCEC and Principal Investigator for the project. "Frankly, we were at the very limits of these new capabilities for research of this type."

In addition to Cui, Olsen, Jordan, and Maechling, other researchers on the Scalable Earthquake Simulation on Petascale Supercomputers project include Amit Chourasia, Kwangyoon Lee, and Jun Zhou from SDSC; Daniel Roten and Steven M. Day from SDSU; Geoffrey Ely and Patrick Small from USC; D.K. Panda and his team from OSU; and John Levesque from Cray Inc.

About SDSC

As an organized research unit of UC San Diego, SDSC is a national leader in creating and providing cyberinfrastructure for data-intensive research. Cyberinfrastructure refers to an accessible and integrated network of computer-based resources and expertise, focused on accelerating scientific inquiry and discovery. SDSC plans to build the high-performance computing community's first flash memory-based supercomputer system, named Gordon, to enter operation in 2011. SDSC is a founding member of TeraGrid, the nation's largest open-access scientific discovery infrastructure.

About SCEC

The Southern California Earthquake Center (SCEC) is a community of over 600 scientists, students, and others at more than 60 institutions worldwide, headquartered at the University of Southern California. SCEC is funded by the National Science Foundation and the U.S. Geological Survey to develop a comprehensive understanding of earthquakes in Southern California and elsewhere, and to communicate useful knowledge for reducing earthquake risk. SDSU is one of several core institutions involved with SCEC.

Comments:

Yifeng Cui, SDSC

(858) 822-0916 or yfcui@sdsc.edu

Kim Olsen, SDSU

(619) 804-9253 or kbolsen@sciences.sdsu.edu

Philip Maechling, SCEC

(213) 821-2491 or maechlin@usc.edu

Media Contacts:

Jan Zverina, SDSC Communications

(858) 534-5111 or jzverina@sdsc.edu

Warren R. Froelich, SDSC Communications

(858) 822-3622 or froelich@sdsc.edu

Mark Benthien, SCEC Communications

(213) 740-0323 or benthien@usc.edu

Gina Jacobs, SDSU Communications

(619) 594-4563 or gina.jacobs@sdsu.edu

Related Links

Southern California Earthquake Center

San Diego State University Department of Geological Sciences

Oak Ridge National Laboratory