SDSC's Trestles Provides Rapid Turnaround and Enhanced Performance for Diverse Researchers

Published July 13, 2011

The Trestles supercomputer, one of the newest systems at the San Diego Supercomputer Center (SDSC), UC San Diego. Ben Tolo, SDSC

In the seven months since its launch, more than 50 separate research projects have been granted time on Trestles. Because the system is intended to serve a large number of users, project allocations on the new SDSC system are capped at 1.5M SUs (service units, or single-processor hours) annually.
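To put the allocation cap in perspective, the short Python sketch below converts SUs into machine time. It takes the article's definition of one SU as one processor-hour and the 10,368-core figure quoted for Trestles; the function names and the 8,760-hour year (no downtime, no leap day) are illustrative assumptions, not SDSC accounting rules.

```python
# Rough SU arithmetic; names and the idealized year are assumptions for illustration.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years and downtime


def full_machine_hours(su_budget: float, cores: int) -> float:
    """Wall-clock hours a budget would buy if every core were used at once."""
    return su_budget / cores


def yearly_capacity_su(cores: int) -> int:
    """Theoretical SUs a machine could deliver in one idealized year."""
    return cores * HOURS_PER_YEAR


CAP_SU = 1_500_000        # annual per-project cap quoted in the article
TRESTLES_CORES = 10_368   # core count quoted in the article

cap_hours = full_machine_hours(CAP_SU, TRESTLES_CORES)
capacity = yearly_capacity_su(TRESTLES_CORES)
cap_share = CAP_SU / capacity

print(f"Cap equals {cap_hours:.1f} hours of the full machine")
print(f"Cap is {cap_share:.2%} of idealized yearly capacity")
```

Under these assumptions, the 1.5M SU cap corresponds to roughly 145 hours of the entire machine, or under 2 percent of a year's theoretical capacity.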

" Trestles was designed to enable modest-scale and gateway researchers to be as computationally productive as possible," said Richard Moore, SDSC's deputy director and co-principal investigator the system, the result of a $2.8 million National Science Foundation (NSF) award. "It has attracted researchers from diverse areas who need access to a fully supported supercomputer with shorter turnaround times than has been typical for most systems. In addition, to respond to user requirements for more flexible access modes, we have enabled pre-emptive on-demand queues for applications which require urgent access in response to unpredictable natural or manmade events that have a societal impact, as well as user-settable reservations for researchers who need predictable access for their workflows."

With 10,368 processor cores, a peak speed of 100 teraflop/s, 20 terabytes of memory, and 39 terabytes of flash memory, Trestles is one of several new HPC (high-performance computing) systems at SDSC. The center is pioneering the use of flash-based memory, common in much smaller devices such as mobile phones and laptop computers but relatively new for supercomputers, which typically rely on slower spinning disk technology.

"Flash disks can read data as much as 100 times faster than spinning disk, write data faster, and are more energy-efficient and reliable," said Allan Snavely, associate director of SDSC and co-PI for the new system. " Trestles uses 120GB flash drives in each node, and users have already demonstrated substantial performance improvements for many applications compared to spinning disk."

Three recent research projects, each allocated one million SUs on Trestles, include:

Climate Change: How Aerosols Affect the Air We Breathe

A computer simulation illustrating the adsorption and subsequent dissociation of hydrogen chloride (HCl) on ice. New research suggests that the ice surface does not simply serve as a static platform on which reactants meet, but plays an active role in the reaction itself. The red and white links correspond respectively to the oxygen (O) and hydrogen (H) atoms of the water molecules of ice. The blue and white structure above corresponds to the chlorine (Cl) and hydrogen (H) atoms of HCl, respectively. Paesani Group, UC San Diego

Researchers believe that aerosols, by influencing the amount of solar radiation that reaches the Earth's surface as well as the formation of clouds, play a major role in the atmosphere, with important consequences for global climate and the ecosystem. Atmospheric aerosols are also associated with adverse effects on human health, notably in the areas of respiratory, cardiovascular, and allergic diseases.

Researchers lack a complete understanding of the properties of aerosols and the chemistry that takes place on these particles, according to Francesco Paesani, assistant professor of chemistry and biochemistry at UC San Diego. "This incomplete knowledge of aerosol chemistry currently limits the possibility of making quantitative predictions about the future state of the atmosphere," said Paesani, who as PI is conducting this research under a grant from the NSF's Environmental Chemical Science Division. Paesani and his research group are also part of the NSF Center for Aerosol Impacts on Climate and the Environment (CAICE) established at UC San Diego.

Using Trestles as a resource, Paesani and his group have been developing computational methodologies to more accurately assess how particles in atmospheric aerosols directly affect air quality, and to better understand their role in global climate concerns such as Earth's radiative balance and cloud formation. The group's research focuses on modeling chemical reactions on aerosol surfaces under atmospheric conditions, specifically on characterizing the molecular mechanisms that determine the dissociation of inorganic acids such as hydrogen chloride (HCl), nitric acid (HNO3) and sulfuric acid (H2SO4) in aqueous environments. They have been studying the dissociation of HCl in small water clusters as well as on ice surfaces under atmospheric temperature conditions.

HCl has been shown to play a critical role in chlorine activation reactions taking place at the surface of polar stratospheric clouds. Paesani's preliminary results indicate that the dissociation of HCl on ice surfaces is very rapid at all relevant atmospheric temperatures of 180 to 270 Kelvin (-135 to +25 degrees F). These results suggest that the ice surface does not simply serve as a static platform on which the reactants meet, but actually plays an active role in the reaction itself.
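For readers who want to reproduce the temperature range given above, the following Python sketch applies the standard Kelvin-to-Fahrenheit conversion. The function name is hypothetical and chosen only for illustration.

```python
def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert a temperature from Kelvin to degrees Fahrenheit via Celsius."""
    celsius = kelvin - 273.15
    return celsius * 9.0 / 5.0 + 32.0


# Endpoints of the atmospheric temperature range quoted in the article.
low_f = kelvin_to_fahrenheit(180.0)
high_f = kelvin_to_fahrenheit(270.0)

print(f"{low_f:.1f} F to {high_f:.1f} F")
```

The computed endpoints, about -136 and +26 degrees F, fall within a degree or two of the rounded range quoted in the text.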

"Since our computational methodologies take into account the quantum nature of both electrons and nuclei using a 'first principles' approach, our molecular simulations are computationally highly expensive.," said Paesani, who recently published related research in the Journal of Physical Chemistry. "Having access to a powerful computing cluster such as Trestles has greatly benefited us."

Molecular Dynamics: Thermal Management in Microelectronics

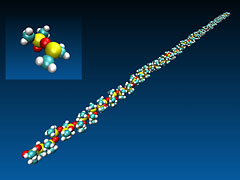

This computer simulation shows the atomic structure of a chain of polydimethylsiloxane (PDMS), a silicon-based polymer widely used in thermal management, which is a key issue in microelectronics. (Inset: a fundamental unit of the PDMS chain.) T. Luo, MIT

Tengfei Luo, with the Massachusetts Institute of Technology (MIT), specializes in the study of thermal management in microelectronics. His supercomputer simulations show that the intrinsic thermal conductivity of polymer chains, widely used in thermal management, strongly depends on their structural stiffness, and that surface morphology can greatly influence the efficiency of heat transfer across interfaces, or connections. By contrast, "soft" chains, or less structurally stiff ones, tend to scatter more heat carriers, resulting in low thermal conductivity.

"Our research is focused on studying heat transfer mechanisms in many different materials, and in particular we are providing new insights into the selection of thermally conductive polymers and better thermal transporting interfaces, essential to the ongoing miniaturization of microelectronics," said Luo.

"We found Trestles to be a very versatile system, on which we could run a large number of smaller jobs simultaneously or perform larger simulations of more than 512 cores involving tens of thousands of atoms," added Luo. "Unlike other systems, we were able to use Trestles to run jobs for as long as two weeks, which is a very valuable feature for studying thermal transport. Our I/O-intensive first-principle calculations greatly benefited from the system's local flash memory, while improving the stability of our calculations. Plus the large memory (64GB) on each node helped us significantly for memory-demanding FP calculations."

Luo's latest research related to thermal management was published in the April 2011 issue of the Journal of Applied Physics.

Astrophysics: High-Energy Collisions of Black Holes

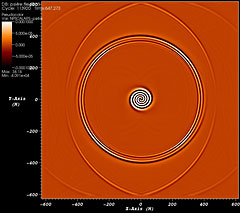

This computer simulation shows gravitational wave ripples generated during a high-energy collision of two black holes shot at each other at about 75% of the speed of light. In this configuration, the black holes zoom and whirl around each other before separating again. Losing so much energy in the form of gravitational waves that they are unable to escape each other's gravitational pull, they eventually merge, forming a single larger black hole. The X and Y axes measure the horizontal/vertical distances from the center of mass in units of the black holes' radius. U. Sperhake, CalTech/CSIC-IEEC

Ulrich Sperhake, a theoretical astrophysics researcher with the California Institute of Technology (CalTech) as well as the Institute of Space Sciences at CSIC-IEEC, Barcelona, Spain, was recently allocated one million SUs on Trestles to further investigate high-energy collisions of black holes, with the goal of improving our understanding of the dynamics of these implosions.

"The purpose of our research is to identify the possibility of achieving numerically more robust and accurate simulations by using alternative formulations," said Sperhake, PI of the NSF- funded project, whose team has been studying black holes in three distinct areas of experimental and observational research: gravitational wave physics, Trans-Planckian Scattering, and high-energy physics. Sperhake plans to publish a detailed review of his numerical studies in those three areas. His most recent research was published in the May 20, 2011 issue of Physical Review D .

"Numerical simulations of black-hole binary space times in the framework of Einstein's theory of General Relativity, or generalizations thereof, require hundreds of processors and of the order of 100s of GB of memory," said Sperhake. "That is way outside the scope of workstations available locally, so the importance of having a computer cluster of the quality of Trestles cannot be overstated."

A TeraGrid/XSEDE Resource

Trestles is appropriately named because it is serving as a bridge between SDSC's current resources, such as Dash, and Gordon, a much larger data-intensive system scheduled for deployment in late 2011/early 2012. Like Dash and the upcoming Gordon system, Trestles is available to users of the NSF's TeraGrid, the nation's largest open-access scientific discovery infrastructure. TeraGrid is transitioning this summer to a new phase called XSEDE (Extreme Science and Engineering Discovery Environment), the result of a new multi-year NSF award.

Trestles is among the five largest supercomputers in the TeraGrid/XSEDE repertoire, and is backed by SDSC's Advanced User Support group, which has established key benchmarks to accelerate user applications and assist users in optimizing applications. Full details of the new system, including how to utilize on-demand access or user-settable reservations, can be found on the SDSC Trestles webpage.

About SDSC

As an Organized Research Unit of UC San Diego, SDSC is a national leader in creating and providing cyberinfrastructure for data-intensive research, and celebrated its 25th anniversary in late 2010 as one of the National Science Foundation's first supercomputer centers. Cyberinfrastructure refers to an accessible and integrated network of computer-based resources and expertise, focused on accelerating scientific inquiry and discovery.

Media Contacts:

Jan Zverina, SDSC Communications, 858 534-5111 or jzverina@sdsc.edu

Warren R. Froelich, SDSC Communications, 858 822-3622 or froelich@sdsc.edu

Related Links

UC San Diego: http://www.ucsd.edu/

National Science Foundation: http://www.nsf.gov/

TeraGrid: https://www.teragrid.org/

XSEDE: https://www.xsede.org

Paesani Group website: http://paesanigroup.ucsd.edu

Tengfei Luo, Journal of Applied Physics: http://jap.aip.org/resource/1/japiau/v109/i7/p074321_s1

Ulrich Sperhake, Physical Review D: http://prd.aps.org/abstract/PRD/v83/i10/e104041

Institute of Space Sciences (Barcelona): http://www.ice.csic.es/en/